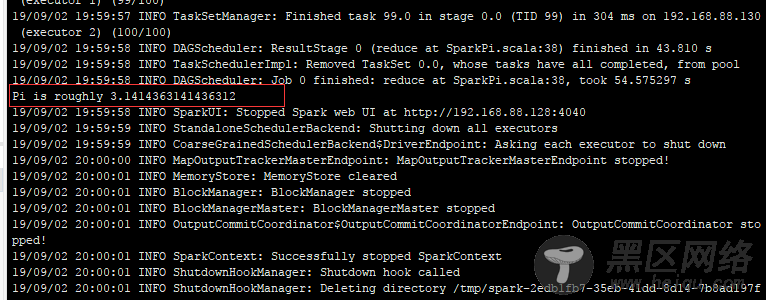

[root@hdp-01 spark]# bin/spark-submit --master spark://hdp-01:7077 --class org.apache.spark.examples.SparkPi --executor-memory 1G --total-executor-cores 1 examples/jars/spark-examples_2.11-2.2.0.jar 100

[root@hdp-01 spark]# bin/spark-submit --class org.apache.spark.examples.SparkPi --master spark://hdp-01:7077 examples/jars/spark-examples_2.11-2.2.0.jar 100

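Parameter description: --master spark://hdp-01:7077 sets the address of the Master; --executor-memory 1G gives each executor 1G of memory; --total-executor-cores 1 limits the application to 1 CPU core in total across the whole cluster.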
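To see how these parameters fit together, the following dry-run sketch assembles the same spark-submit command from named shell variables and prints it instead of running it. The variable names are made up for illustration; the values are the ones used in the commands above.

```shell
#!/bin/sh
# Dry run: build the spark-submit command from named settings and print it.
# Variable names are illustrative; the values come from the commands above.
master_url="spark://hdp-01:7077"        # address of the Master
executor_memory="1G"                    # memory per executor
total_executor_cores=1                  # CPU cores for the whole application
app_class="org.apache.spark.examples.SparkPi"
app_jar="examples/jars/spark-examples_2.11-2.2.0.jar"
app_args=100                            # argument passed to SparkPi

submit_cmd="bin/spark-submit --master $master_url --class $app_class --executor-memory $executor_memory --total-executor-cores $total_executor_cores $app_jar $app_args"

# Print only; nothing is submitted to the cluster here.
echo "$submit_cmd"
```

To actually submit the job, run the printed command from the Spark installation directory.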

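Running a Spark program in cluster mode

Here --master lists two Master addresses separated by commas, which is the form used when a standby Master is configured.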

[root@hdp-01 spark]# bin/spark-submit --class org.apache.spark.examples.SparkPi --master spark://hdp-01:7077,hdp-04:7077 --executor-memory 1G --total-executor-cores 2 examples/jars/spark-examples_2.11-2.2.0.jar 100

Writing a WordCount program in the Spark shell

Start HDFS and upload a file of words to HDFS

[root@hdp-01 ~]# start-all.sh

[root@hdp-01 ~]# vi spark.txt

hello java hello spark hello hdfs hello yarn yarn hdfs

[root@hdp-01 ~]# hadoop fs -mkdir -p /spark

[root@hdp-01 ~]# hadoop fs -put spark.txt /spark

Run the job in the Spark shell

scala> sc.textFile("hdfs://hdp-01:9000/spark").flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_).collect
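To sanity-check the counts before looking at the Spark output, the same word count can be approximated locally with standard Unix tools. This is only a rough analogue of the flatMap / map / reduceByKey chain, not how Spark computes it, and the sample text is assumed to be the five word pairs written in the script.

```shell
#!/bin/sh
# Local sanity check for the WordCount result, using only standard Unix tools.
# The sample text is an assumption: the words from spark.txt, one pair per line.
words_file=$(mktemp)
printf '%s\n' 'hello java' 'hello spark' 'hello hdfs' 'hello yarn' 'yarn hdfs' > "$words_file"

# tr puts each word on its own line (roughly flatMap),
# sort brings equal words together, and uniq -c counts each group
# (roughly map + reduceByKey). awk reorders the columns to "word count".
word_counts=$(tr -s ' ' '\n' < "$words_file" | sort | uniq -c | awk '{print $2, $1}')

echo "$word_counts"
rm -f "$words_file"
```

With this sample text the script prints hdfs 2, hello 4, java 1, spark 1 and yarn 2, one pair per line. The Spark job should report the same counts for the same input, although collect returns them as (word,count) tuples and the order may differ.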

Write the result to HDFS

scala> sc.textFile("hdfs://hdp-01:9000/spark").flatMap(_.split(" ")).map((_,1)).reduceByKey(_+_).saveAsTextFile("hdfs://hdp-01:9000/outText")

2019-06-25
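saveAsTextFile writes a directory rather than a single file, with one part-NNNNN file per partition. A minimal sketch for inspecting it follows, assuming the hadoop command is on the PATH and that /outText on the default filesystem is the same location as hdfs://hdp-01:9000/outText. The variable name is illustrative.

```shell
#!/bin/sh
# Inspect the directory written by saveAsTextFile.
# Assumption: /outText on the default filesystem is hdfs://hdp-01:9000/outText.
output_dir="/outText"

if command -v hadoop >/dev/null 2>&1; then
    # List the part files that make up the result.
    hadoop fs -ls "$output_dir"
    # Print every part file; the glob is quoted so HDFS expands it, not the local shell.
    hadoop fs -cat "$output_dir/part-*"
else
    echo "hadoop command not found; run this on a cluster node such as hdp-01"
fi
```

Note that saveAsTextFile fails if the target directory already exists, so pick a new path or remove the old directory before running the job again.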
